President Obama has ordered a megaproject to build the most powerful computer in the world, in order to regain the position the United States lost to China’s Tianhe-2. But the ultimate objective is strategic: “Who doesn’t compute does not compete”.

The first to strike was the USSR with Sputnik, the first satellite to orbit the planet, back in 1957. Next came the dog Laika and the cosmonaut Yuri Gagarin. During the years of the space race, in the middle of the Cold War, it seemed the Soviets were winning the contest and rubbing their scientific superiority and military power in the world’s face. Until the United States, tired of being the runner-up, broke every mold with Apollo 11, Neil Armstrong and the Moon. Half a century later, the USSR no longer exists, but China does, and it challenges the United States on the map of superpowers. And the technology race no longer takes place in space but in the world of silicon, chips and computing capacity: the development of High Performance Computing (HPC), or simply supercomputing, has become one of the most strategically important issues for these countries, which compete to create the most powerful machines in the world.

Barack Obama is the JFK of this competition. In July the U.S. president signed an executive order creating the National Strategic Computing Initiative, a multimillion-dollar partnership between the Department of Defense, the Department of Energy and the National Science Foundation whose purpose is to build the fastest supercomputer in the world, surpassing Tianhe-2, developed by China’s National University of Defense Technology, which holds the title today.

Obama, in effect, wants to reach the Moon and perhaps go a bit further. The project seeks to develop the first supercomputer of the exaflops era, that is, one able to perform a quintillion operations per second. To put this in context, we are talking about a billion billion calculations, or a 1 followed by 18 zeros: 1,000,000,000,000,000,000.

With a machine like this, the United States would regain much more than its pride, because it would be close to 30 times faster than Tianhe-2, which today runs at 33.86 petaflops (the scale just below exaflops), meaning it performs 33,860 trillion operations per second. Although the comparison irritates scientists, because it is inaccurate given the many factors that influence a device’s speed, it could be said that the Chinese supercomputer’s capacity equals that of an array of 20 million home laptops and is almost double that of the runner-up on the list, the U.S. machine Titan, at 17.6 petaflops.
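The ratios quoted above can be checked with a few lines of arithmetic. The sketch below uses only the figures given in the text (33.86 and 17.6 petaflops, a 1-exaflops target, 20 million laptops); the variable names are illustrative, and the per-laptop figure is merely what the comparison implies, not a measured value.

```python
# Figures quoted in the article, in floating-point operations per second.
PETA = 10**15
EXA = 10**18

tianhe2 = 33.86 * PETA        # Tianhe-2, as quoted
titan = 17.6 * PETA           # Titan, as quoted
exascale_target = 1 * EXA     # the planned exaflops machine

# How much faster the planned machine would be than Tianhe-2.
speedup_over_tianhe2 = exascale_target / tianhe2

# How Tianhe-2 compares with the runner-up.
tianhe2_over_titan = tianhe2 / titan

# Speed per laptop implied by the "20 million home laptops" comparison.
flops_per_laptop = tianhe2 / 20_000_000

print(f"Exaflops target vs Tianhe-2: {speedup_over_tianhe2:.1f}x")
print(f"Tianhe-2 vs Titan: {tianhe2_over_titan:.2f}x")
print(f"Implied laptop speed: {flops_per_laptop / 10**9:.2f} gigaflops")
```

The first ratio comes out just under 30 and the second just under 2, in line with “close to 30 times” and “almost double”.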

Although details of the U.S. project have not yet been revealed, there are clues: at the beginning of the year, Intel and Cray won a US$200 million contract to build a 180-petaflops computer in 2018. The investment needed to reach exaflops could easily exceed US$3 billion.

Scientists worldwide are rubbing their hands at the prospects of this competition.

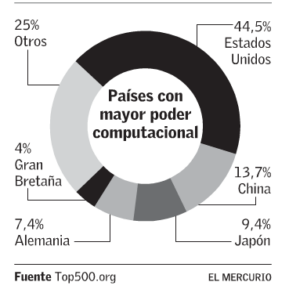

CHINA’S TIANHE-2 is today’s fastest computer. But the United States leads in combined capacity.

“Many say, ‘Who doesn’t compute does not compete’. Today that computational power is necessary to compete as a world power, and the fact that the United States is responding to China having the biggest supercomputer is proof of that. Today, too, having greater computing power can be a defining factor in a country’s progress”, says Ginés Guerrero, deputy director of the National Laboratory for High Performance Computing (NLHPC) at the Center for Mathematical Modeling of the Universidad de Chile, which is in charge of Leftraru, the biggest supercomputer available in the country.

Robyn Grimes, Chief Scientific Adviser (CSA) of the British council, who was in Chile this week and visited the NLHPC project, takes the same line. “The British government sees this project as one of strategic importance, totally. Having large computing capacity is today a national imperative for Great Britain”, says this expert in nuclear energy, whose country holds fifth place on the list of the fastest supercomputers in the world.

“In China’s case, they have a thirst to be recognized as a scientific nation, and they are making progress in that sense. Part of that is having very advanced computational science: it is a way of announcing to the world how far they have come”.

The practical applications of HPC are almost unlimited, from drug research and “precision medicine” to more exact studies of climate change and research into the secrets of the human brain. In the social sciences it can be used to make financial predictions or to design “smart cities”. And without doubt (hence the Pentagon’s interest) it could have military purposes. Everything is computable in these times of so-called big data.

“Supercomputers allow us to solve complex problems with many variables that could not be solved before, or that would have taken thousands of years with ordinary computers”, explains Guerrero, who gives as an example the simulations that pharmaceutical companies run: “They want to see how a molecule interacts with a possible drug. With a supercomputer, they can simulate billions of compounds to see which ones work, instead of doing it in a test tube in the laboratory, which is very expensive”. In a hypothetical war, the scientist adds, a supercomputer could calculate where to drop a bomb by analyzing factors such as the weather, the wind or the demographics. “It can be applied in many fields, really. Today, having a supercomputer represents a real advantage”, he insists.

Grimes highlights that Great Britain, rather than chasing the biggest or fastest supercomputer, is now focused on developing the most predictive algorithms and programs. Nonetheless, he says he is enthusiastic about the development of the U.S. supercomputer, because it will generate new technologies that will later be available to other countries, just as happened with the advances of NASA or of the Pentagon, which in the 1960s created Arpanet, the predecessor of today’s internet.

“We all benefit from this competition”, he notes.

Moore’s Law

One of the key issues will be energy. A supercomputer like the one Obama wants would, with today’s technology, need more than 500 megawatts to operate, nearly half of what a standard nuclear plant produces. Research on cooling will be needed (these machines overheat a lot), and, of course, the capacity and miniaturization of processors and memory devices will have to keep being pushed. Over the course of 50 years, “Moore’s Law”, which says that computing capacity doubles every 18 months, has held true, but in the coming exaflops era this rule could easily become obsolete.
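As a rough illustration of what that doubling rule would imply, the sketch below estimates how long it would take to go from Tianhe-2’s 33.86 petaflops to one exaflops if capacity really doubled every 18 months. It takes the article’s 18-month figure at face value and assumes smooth exponential growth, which is a simplification; the variable names are illustrative.

```python
import math

# Assumption: capacity doubles every 18 months, as stated in the article.
DOUBLING_MONTHS = 18

current_pflops = 33.86    # Tianhe-2, as quoted in the article
target_pflops = 1000.0    # 1 exaflops = 1,000 petaflops

# Number of doublings needed to close the gap.
doublings = math.log2(target_pflops / current_pflops)

# Time those doublings would take under the assumed rule.
years = doublings * DOUBLING_MONTHS / 12

print(f"Doublings needed: {doublings:.2f}")
print(f"Years at one doubling per {DOUBLING_MONTHS} months: {years:.1f}")
```

Under these assumptions the gap is a little under five doublings, or somewhat more than seven years.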

On the other hand, if numbers are infinite, where will the supercomputing race end? Will it have a winner? “In this supercomputing ‘war’ there’s something different from the space race”, says Guerrero. “In the space race there was a starting point and a finish line, which was reaching the Moon. But in this case there is no final point on the line; this is going to be continuous progress. And it has been shown that the more supply there is, the more demand it creates”. Grimes gets animated by the dilemma. “We debate this a lot. Computing capacity has been growing year after year, and we haven’t had any sign that it will stop. But it will stop, it must stop, it will have to stop. It can’t go on forever. Although there are no clues that it is coming to any grinding halt”. And yes, the next step already has a name: the era of the “zettaflops”, one small step for man, one giant compute for mankind.

Leftraru: the Chilean project

The most powerful computer in Chile is called Leftraru and is managed by the National Laboratory for High Performance Computing (NLHPC), a unit of the Center for Mathematical Modeling of the Universidad de Chile that has eight other universities as associate institutions.

“With this supercomputer we rank second in Latin America, which is very good”, says Ginés Guerrero, the NLHPC’s deputy director. “We are very happy. Right now we have 2,640 computing nodes we can offer to the country’s scientists. The number we usually use is that Leftraru equals 25,000 home laptops”. Just to draw a comparison, the most powerful computer in the world, China’s Tianhe-2, is 762 times faster than Leftraru. And the supercomputer Obama is planning would be 22,500 times more powerful. “Compared with other computers it is left far behind, but Leftraru can indeed offer more than enough computational power for science in Chile to progress”, says Guerrero. “But obviously we want to grow”. Created with financial support from Conicyt, the NLHPC offers the supercomputer’s services free of charge to both the scientific and academic worlds. “Today, the machine is used mostly for research in molecular dynamics, bioinformatics, climatology, quantum chemistry and astrophysics.

“Now we are in the second phase of the project: taking it to the public sector and industry. What we want to do is plant that little seed and see what can be done with a supercomputer”, remarks Guerrero.
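The comparisons quoted in this section can be cross-checked against each other. The sketch below derives Leftraru’s implied speed from the statement that Tianhe-2 (33.86 petaflops) is 762 times faster, then checks the “22,500 times” figure for a 1-exaflops machine. The derived Leftraru speed is an inference from the article’s ratios, not an official specification, and the variable names are illustrative.

```python
TERA = 10**12
PETA = 10**15
EXA = 10**18

tianhe2 = 33.86 * PETA            # as quoted in the article
tianhe2_over_leftraru = 762       # ratio quoted in the article

# Leftraru's speed implied by the quoted ratio (an inference, not a spec).
leftraru = tianhe2 / tianhe2_over_leftraru

# How a 1-exaflops machine would compare with Leftraru.
exascale_over_leftraru = (1 * EXA) / leftraru

# Speed per laptop implied by "Leftraru equals 25,000 home laptops".
flops_per_laptop = leftraru / 25_000

print(f"Implied Leftraru speed: {leftraru / TERA:.1f} teraflops")
print(f"Exaflops machine vs Leftraru: {exascale_over_leftraru:,.0f}x")
print(f"Implied laptop speed: {flops_per_laptop / 10**9:.2f} gigaflops")
```

The second figure comes out within a fraction of a percent of the article’s 22,500, so the quoted ratios are consistent with one another.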

Source: El Mercurio.com